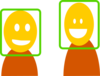

HUFA detects and tracks frontal and profile faces within videos.

Overview:

This service first performs frame-by-frame face detection following the boosted cascade approach of [1] and [2], with local binary pattern (LBP) features, using the OpenCV 2 implementation. Detected faces that overlap within a single frame are fused by averaging their positions.
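The fusion step can be sketched as follows. This is a minimal illustration, not HUFA's code. It makes three assumptions:

- Each detection is an (x1, y1, x2, y2) rectangle, for instance converted from the (x, y, w, h) rectangles returned by OpenCV's `CascadeClassifier.detectMultiScale`.
- Two rectangles overlap when their intersection has a non-zero area.
- Chains of overlapping rectangles are merged transitively.

The helper names `overlaps` and `fuse_detections` are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def overlaps(a: Box, b: Box) -> bool:
    """True if the two rectangles share a non-empty area (assumed overlap criterion)."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


def fuse_detections(boxes: List[Box]) -> List[Box]:
    """Merge the overlapping detections of one frame into their average rectangle.

    Grouping is transitive: if A overlaps B and B overlaps C, all three are averaged.
    """
    n = len(boxes)
    parent = list(range(n))  # union-find forest over box indices

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if overlaps(boxes[i], boxes[j]):
                parent[find(i)] = find(j)

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(boxes[i])

    # Average each coordinate over the members of every group.
    return [
        tuple(sum(b[c] for b in members) / len(members) for c in range(4))
        for members in groups.values()
    ]
```

For example, two detections (0, 0, 10, 10) and (2, 2, 12, 12) of the same face fuse into (1, 1, 11, 11), while a distant detection is kept unchanged.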

Second, for every shot specified in the input, the detected faces are grouped into classes according to their spatial overlap across frames, and a Kalman tracker is applied to each class to smooth the positions of its elements over time.
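The grouping and smoothing steps can be illustrated with the sketch below. HUFA's exact linking rule and Kalman model are not specified here, so both are assumptions:

- Faces are linked greedily to the group whose most recent rectangle, taken from an earlier frame, they overlap.
- Each rectangle coordinate is filtered independently with a constant-position Kalman filter.
- The noise variances `q` and `r` are hypothetical values.

All function names are illustrative.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Track = List[Tuple[int, Box]]           # (frame_number, box) pairs of one group of faces


def overlaps(a: Box, b: Box) -> bool:
    """True if the two rectangles share a non-empty area."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


def group_faces(shot: Dict[int, List[Box]]) -> List[Track]:
    """Greedily link the fused faces of one shot ({frame: boxes}) into groups.

    A face joins the first group whose latest box comes from an earlier frame
    and overlaps it; otherwise it starts a new group (hypothetical rule).
    """
    groups: List[Track] = []
    for frame in sorted(shot):
        for box in shot[frame]:
            for g in groups:
                last_frame, last_box = g[-1]
                if last_frame < frame and overlaps(last_box, box):
                    g.append((frame, box))
                    break
            else:
                groups.append([(frame, box)])
    return groups


def kalman_1d(values: List[float], q: float = 1.0, r: float = 16.0) -> List[float]:
    """Scalar Kalman filter with a constant-position (random-walk) model.

    q is the process-noise variance and r the measurement-noise variance.
    """
    x, p = float(values[0]), r  # initialise the state with the first measurement
    out = [x]
    for z in values[1:]:
        p += q              # predict: position unchanged, uncertainty grows
        k = p / (p + r)     # Kalman gain
        x += k * (z - x)    # correct with the new measurement
        p *= 1.0 - k
        out.append(x)
    return out


def smooth_track(track: Track) -> Track:
    """Filter each coordinate of a group of faces independently over time."""
    columns = zip(*(box for _, box in track))  # x1, y1, x2, y2 sequences
    filtered = [kalman_1d(list(col)) for col in columns]
    return [(frame, tuple(col[i] for col in filtered))
            for i, (frame, _) in enumerate(track)]
```

This filter is causal: each estimate uses only past frames. Because a whole shot is available offline, a backward Rauch-Tung-Striebel pass could be added to obtain a true smoother.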

[1] Viola P. and Jones M. J., "Rapid Object Detection using a Boosted Cascade of Simple Features". In Proc. of IEEE CVPR, 9 pages, 2001.

[2] Lienhart R. and Maydt J., "An Extended Set of Haar-like Features for Rapid Object Detection". In Proc. of IEEE ICIP 2002, Vol. 1, pp. 900-903, 2002.

File formats:

HUFA takes two files as input: a video file and a JSON file containing an estimation of its shot boundaries. It outputs the positions of the detected and tracked faces over the video in two forms: by updating the input JSON file, and as a raw text file.
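Below is a minimal sketch for checking a shot-boundary file before running HUFA. It assumes the file follows the Vidseg layout given in the output description, with transitions listed in the "events" array of the "vidseg" attribute and expressed in frames. The function names are hypothetical. The `time_unit` check is an extra safeguard, not a documented HUFA requirement.

```python
import json
from typing import Any, List, Tuple


def shot_transitions(doc: dict) -> List[Tuple[int, int, Any]]:
    """Extract (start, end, type) shot transitions from a Vidseg-style document."""
    seg = doc.get("vidseg")
    if seg is None:
        # HUFA expects the shot boundaries under the "vidseg" attribute.
        raise ValueError('missing "vidseg" attribute')
    if seg.get("time_unit", "frames") != "frames":
        # The documented layout expresses transitions in frames.
        raise ValueError('expected "time_unit": "frames"')
    return [(ev["start"], ev["end"], ev.get("type")) for ev in seg["events"]]


def load_shot_transitions(path: str) -> List[Tuple[int, int, Any]]:
    """Read a "<video_file_name>.json" file from disk and return its shot transitions."""
    with open(path, encoding="utf-8") as f:
        return shot_transitions(json.load(f))
```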

- inputs:

  - video file: many video formats are supported, as OpenCV relies on the FFMPEG libraries to read videos.

  - shot detection file in JSON (required): HUFA's face tracking relies on estimated shot boundaries, stored in a file that follows the format of Vidseg's JSON output. This file, named "<video_file_name>.json", must store the shot boundaries under the "vidseg" attribute. It is updated in place with HUFA's results.

- outputs:

  - JSON file <input_file_name>.json with the following content:

```
{
  "general_info": {
    "src": "<input_file_name>",
    "video": {
      "duration": "<time_in_hh:mm:ss_format>",
      "start": "<temporal_offset_in_seconds>",
      "format": "<format>",
      "resolution": "<width>x<height> pix",
      "frame_rate": "<frame_rate> fps",
      "bit_rate": "<bit rate> kb/s",
      "color_space": "<color_space_ref>"
    }
  },
  "vidseg": {
    "annotation_type": "shot transitions",
    "system": "vidseg (<profile>)",
    "parameters": "<input_parameters>",
    "modality": "video",
    "time_unit": "frames",
    "events": [
      {
        "start": <frame_num_start>,
        "end": <frame_num_end>,
        "type": <type_of_transition>
      },
      {
        "start": <frame_num_start>,
        "end": <frame_num_end>,
        "type": <type_of_transition>
      },
      ...
    ]
  },
  "hufa": {
    "annotation_type": "face positions over time",
    "system": "hufa",
    "parameters": "<input_parameters>",
    "modality": "video",
    "time_unit": "frames",
    "events": [
      {
        "start": <frame_num_start>,
        "end": <frame_num_end>,
        "type": <group_of_faces_index>,
        "positions": [
          [<frame_number>, <x1>, <x2>, <y1>, <y2>],
          [<frame_number>, <x1>, <x2>, <y1>, <y2>],
          ...
        ]
      },
      {
        "start": <frame_num_start>,
        "end": <frame_num_end>,
        "type": <group_of_faces_index>,
        "positions": [
          [<frame_number>, <x1>, <x2>, <y1>, <y2>],
          ...
        ]
      },
      ...
    ]
  }
}
```

The value associated with the "positions" attribute is an array listing the frame number and face position for each frame of the related group of faces, i.e., a single tracked face. Points (x1,y1) and (x2,y2) refer respectively to the top-left and bottom-right corners of the rectangle delimiting the detected face. Note that no clustering is performed over the groups of faces: every group of faces has a different <group_of_faces_index>, so the same person appearing in two tracks gets two different indexes. <frame_num_start> and <frame_num_end> are the numbers of the first and last frames of the associated group of faces.

  - text file: the first line gives the number of frames of the input video. Each subsequent line gives the positions and group indexes of the faces detected and tracked within a single frame, in the following format:

```
<total_number_of_frames>\n
<frame_index>\t<x1>\t<y1>\t<x2>\t<y2>\t<group_of_face_index>\t<x1>\t<y1>\t<x2>\t<y2>\t<group_of_face_index>...\n
<frame_index>\t<x1>\t<y1>\t<x2>\t<y2>\t<group_of_face_index>\t<x1>\t<y1>\t<x2>\t<y2>\t<group_of_face_index>...\n
...
```

The positions and group-of-faces indexes are the same as in the JSON file. Every frame is represented. A line containing a single value (the frame index) corresponds to a frame where no face was detected.
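Both output files can be read back with a few lines of Python. The function names in this sketch are hypothetical.

- The JSON reader reorders each `positions` entry from [frame, x1, x2, y1, y2] into an (x1, y1, x2, y2) rectangle.
- The text reader parses coordinates as floats, because the format does not state whether they are integers.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def tracks_from_json(doc: dict) -> Dict[int, List[Tuple[int, Box]]]:
    """Map each <group_of_faces_index> of the "hufa" section to its (frame, box) list.

    Each "positions" entry is stored as [frame, x1, x2, y1, y2];
    it is reordered here into (x1, y1, x2, y2).
    """
    tracks: Dict[int, List[Tuple[int, Box]]] = {}
    for ev in doc["hufa"]["events"]:
        tracks[ev["type"]] = [
            (frame, (x1, y1, x2, y2)) for frame, x1, x2, y1, y2 in ev["positions"]
        ]
    return tracks


def faces_from_text(text: str) -> Tuple[int, Dict[int, List[Tuple[Box, int]]]]:
    """Parse the raw text output into (frame count, {frame: [(box, group), ...]})."""
    lines = [line for line in text.splitlines() if line.strip()]
    n_frames = int(lines[0])
    faces: Dict[int, List[Tuple[Box, int]]] = {}
    for line in lines[1:]:
        fields = line.split()  # values are tab-separated
        frame, rest = int(fields[0]), fields[1:]
        if len(rest) % 5:
            raise ValueError(f"malformed line for frame {frame}: {line!r}")
        # Each face contributes x1, y1, x2, y2 and its group index.
        faces[frame] = [
            (tuple(float(v) for v in rest[i:i + 4]), int(rest[i + 4]))
            for i in range(0, len(rest), 5)
        ]
    return n_frames, faces
```

For instance, after `doc = json.load(f)` on the updated JSON file, `tracks_from_json(doc)` returns one list of (frame, rectangle) pairs per tracked face.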

Credits and license:

HUFA was developed by Gabriel Sargent at IRISA/Inria Rennes-Bretagne Atlantique.

It uses OpenCV 2.4.11, released under a BSD license, and FFMPEG, released under the GNU LGPL2. The program performing the initial face detection is inspired by A. Huaman's OpenCV tutorial.

22/08/2017: Version 1.0.

How to use our REST API:

First, check your private token in your account.

More details are available in the documentation tab.

This app's ID is 81.

The following curl command creates a job and returns your job URL, along with the average execution time.

The files and/or dataset parameters are optional; remove them if not needed.

```shell
curl -H 'Authorization: Token token=<your_private_token>' -X POST \
  -F 'job[webapp_id]=81' \
  -F 'job[param]=' \
  -F 'job[queue]=standard' \
  -F 'files[0]=@test.txt' \
  -F 'files[1]=@test2.csv' \
  -F 'job[file_url]=<my_file_url>' \
  -F 'job[dataset]=<my_dataset_name>' \
  https://allgo.inria.fr/api/v1/jobs
```

Then, query your job to get the URLs of its result files:

```shell
curl -H 'Authorization: Token token=<your_private_token>' -X GET https://allgo.inria.fr/api/v1/jobs/<job_id>
```
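The status request can also be sent from Python using only the standard library. This sketch mirrors the curl status command (same URL and `Authorization` header). The function names are hypothetical. Decoding the reply as JSON is an assumption, since the response format is not described here.

```python
import json
import urllib.request

API_ROOT = "https://allgo.inria.fr/api/v1"


def job_status_request(token: str, job_id: int) -> urllib.request.Request:
    """Build the same GET request as the curl command for job <job_id>."""
    return urllib.request.Request(
        f"{API_ROOT}/jobs/{job_id}",
        headers={"Authorization": f"Token token={token}"},
        method="GET",
    )


def fetch_job(token: str, job_id: int) -> dict:
    """Send the request and decode the reply, assumed here to be JSON."""
    with urllib.request.urlopen(job_status_request(token, job_id)) as resp:
        return json.load(resp)
```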


gabriel.sargent@irisa.fr